Introduction

Artificial intelligence stands at a critical juncture in healthcare, holding both the promise of major breakthroughs and the potential for significant harm. On one hand, AI can accelerate diagnostics, personalize treatments, and streamline clinical workflows. On the other, its adoption is fraught with challenges related to patient data privacy, the high cost of proprietary systems, and equitable access. Into this complex landscape, Google has introduced MedGemma, a strategic response aimed at these obstacles.

Announced as part of its Health AI Developer Foundations (HAI-DEF) initiative, MedGemma is not just another large language model. It is a family of powerful, open, and specialized models meticulously designed to democratize and accelerate AI development within the medical field. By providing these tools openly, Google aims to empower researchers, developers, and healthcare institutions to build privacy-preserving, customizable, and efficient AI applications.

This article provides a comprehensive analysis of the MedGemma collection, exploring its technical architecture, model variants, practical applications, and the implications of its open-model approach for the future of healthcare AI.

The MedGemma Collection: Models, Architecture, and Capabilities

The MedGemma collection represents a significant step beyond general-purpose AI, offering a suite of models specifically engineered for the nuances of medical data. This section provides a detailed technical breakdown of the MedGemma family, explaining what each model does, how it is built, and its benchmarked performance.

A Family of Specialized Models

Google has structured the MedGemma collection to cater to a range of computational needs and use cases, from lightweight image classification to complex multimodal reasoning. The family consists of several key variants:

- MedGemma 4B Multimodal: This 4-billion-parameter model is positioned as the balanced, resource-efficient workhorse for many image analysis tasks. It can run on a single GPU and can even be adapted for mobile hardware. It is available in two forms: a pre-trained version (-pt) for researchers who need to conduct deep experimentation, and an instruction-tuned version (-it), which is the better starting point for most applications.

- MedGemma 27B (Text-only & Multimodal): At the higher end of the spectrum are the 27-billion-parameter models. The text-only variant is optimized for pure clinical reasoning, medical text comprehension, and tasks like summarizing patient notes. The multimodal version, announced in July 2025, is the most capable model in the collection, designed for complex tasks that involve interpreting both images and longitudinal Electronic Health Record (EHR) data.

- MedSigLIP: Distinct from the generative MedGemma models, MedSigLIP is a lightweight vision encoder derived from SigLIP. It does not generate text; instead, it powers MedGemma’s image understanding capabilities. On its own, it suits efficient, structured-output tasks such as zero-shot image classification, semantic search, and content-based image retrieval across large medical databases.
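To put the "single GPU" claims in perspective, the following back-of-the-envelope sketch estimates how much memory the model weights alone occupy at a few numeric precisions. It assumes nominal parameter counts of 4 and 27 billion taken from the model names (the exact counts differ slightly) and ignores activations, the KV cache, and framework overhead, so real requirements are higher.

```python
# Rough estimate of the memory needed just to hold model weights.
# Assumption: nominal parameter counts taken from the variant names;
# activations, KV cache and framework overhead are NOT included.

BYTES_PER_PARAM = {
    "float32": 4,
    "bfloat16": 2,  # a common default for GPU inference
    "int8": 1,
    "int4": 0.5,    # 4-bit quantization
}

NOMINAL_PARAMS = {
    "medgemma-4b": 4e9,
    "medgemma-27b": 27e9,
}


def weight_memory_gib(num_params: float, dtype: str = "bfloat16") -> float:
    """Approximate size of the weights in GiB (1 GiB = 2**30 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 2**30


if __name__ == "__main__":
    # Print a small table: one row per variant and precision.
    for name, params in NOMINAL_PARAMS.items():
        for dtype in ("bfloat16", "int4"):
            gib = weight_memory_gib(params, dtype)
            print(f"{name:>13} @ {dtype:<8}: ~{gib:5.1f} GiB")
```

At bfloat16 this comes to roughly 7.5 GiB for the 4B model and roughly 50 GiB for the 27B models, which is consistent with the 4B variant fitting on a single mainstream GPU while the 27B variants generally call for a large data-center GPU or 4-bit quantization (about 12.6 GiB).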

Technical Foundations: Built on Gemma 3, Tuned for Medicine

The entire MedGemma family is built upon the foundation of Google’s Gemma 3 architecture, inheriting features such as computational efficiency and a long context window of up to 128K tokens. However, the true power of MedGemma lies in its extensive medical specialization.

The model’s capability in understanding medical imagery stems from its vision encoder. The SigLIP-derived encoder was specifically tuned on a large and diverse corpus of de-identified medical data. This dataset includes chest X-rays, dermatology images, ophthalmology images, and histopathology slides, giving the model a built-in understanding of medical visual patterns.

Complementing its visual acuity, the Large Language Model (LLM) component was trained on a diverse set of medical sources. This includes medical texts, extensive medical question-answer pairs, and, for the 27B multimodal variant, structured FHIR-based EHR data. This dual-pronged training regimen—specialized vision and specialized text—is what enables MedGemma to perform complex reasoning across different data types.
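The FHIR-based EHR data mentioned above is structured JSON rather than free text. As a rough illustration, the sketch below shows a minimal FHIR R4 Observation resource and one possible way to flatten it into a line of prompt text. The resource fields follow the FHIR specification, but the example values, the helper name observation_to_text, and the output format are illustrative assumptions; the article does not describe how EHR data was formatted for MedGemma's training.

```python
# A minimal FHIR R4 Observation resource (hypothetical example values).
observation = {
    "resourceType": "Observation",
    "status": "final",
    "code": {
        "coding": [
            {
                "system": "http://loinc.org",
                "code": "4548-4",
                "display": "Hemoglobin A1c/Hemoglobin.total in Blood",
            }
        ]
    },
    "valueQuantity": {"value": 7.2, "unit": "%"},
    "effectiveDateTime": "2024-03-15",
}


def observation_to_text(resource: dict) -> str:
    """Flatten one FHIR Observation into a single readable line (hypothetical format)."""
    if resource.get("resourceType") != "Observation":
        raise ValueError("expected an Observation resource")
    # Use the first coding's display name, if present.
    codings = resource.get("code", {}).get("coding", [])
    name = codings[0].get("display", "unknown test") if codings else "unknown test"
    # valueQuantity is only one of several possible value[x] fields in FHIR.
    qty = resource.get("valueQuantity")
    if qty and "value" in qty:
        value = f"{qty['value']} {qty.get('unit', '')}".strip()
    else:
        value = "no value recorded"
    date = resource.get("effectiveDateTime", "unknown date")
    return f"{date}: {name} = {value}"


if __name__ == "__main__":
    print(observation_to_text(observation))
    # prints "2024-03-15: Hemoglobin A1c/Hemoglobin.total in Blood = 7.2 %"
```

A longitudinal record would then be many such lines ordered by date, which is the kind of context a model has to reason over when answering questions about a patient's history.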

Performance and Efficacy

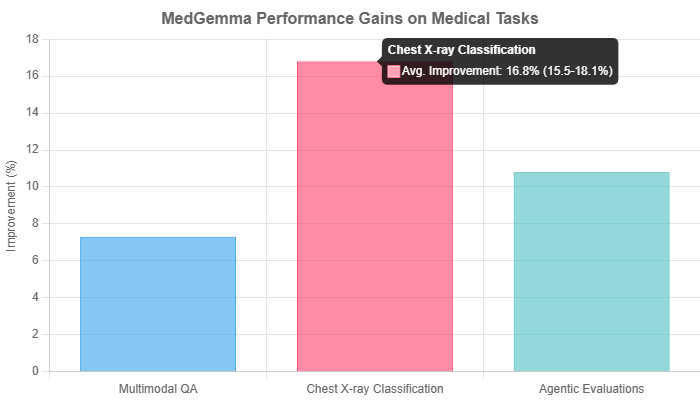

The efficacy of this specialized training is validated by rigorous benchmarking. According to the official technical report, MedGemma models significantly outperform similar-sized generalist models on a range of medical tasks, often approaching the performance of models specifically fine-tuned for a single task while retaining general capabilities.

The performance gains are substantial. On out-of-distribution tasks, the technical report lists improvements over the base Gemma 3 models of 2.6 to 10% on medical multimodal question answering, 15.5 to 18.1% on chest X-ray finding classification, and 10.8% on agentic evaluations. These results underscore the value of domain-specific training for achieving high performance in specialized fields like medicine.

Furthermore, the power of MedGemma is amplified through fine-tuning. The technical report highlights that further training on specific subdomains can yield dramatic improvements. For instance, fine-tuning was shown to reduce errors in electronic health record information retrieval by 50% and to reach performance comparable to state-of-the-art specialized methods for tasks like pneumothorax classification and histopathology patch classification. This demonstrates that MedGemma serves as a powerful and adaptable foundation for building highly accurate, specialized medical AI tools.

From Theory to Practice: Applications and Developer Ecosystem

While technical specifications are impressive, the true measure of MedGemma’s value lies in its practical utility. This section bridges the gap between model capabilities and real-world application, showcasing how developers and researchers can leverage the MedGemma collection and the resources available to support them.

Transforming Clinical Workflows: Key Use Cases

MedGemma is designed to be a versatile tool adaptable to a wide array of clinical and research scenarios. Key use cases include:

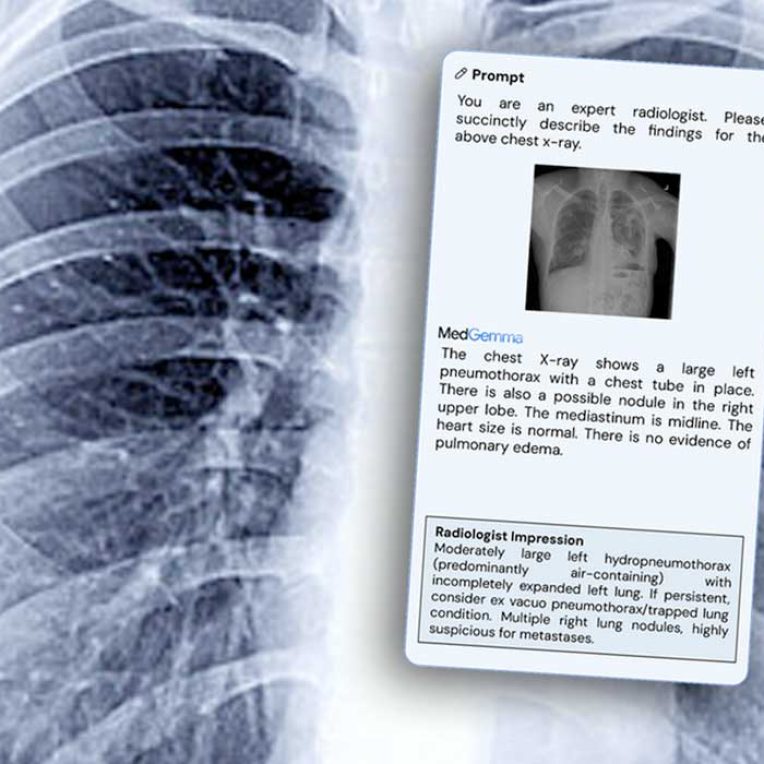

- Image Analysis & Reporting: A primary application is the generation of free-text reports from medical images. This is particularly useful in fields like radiology and pathology, where MedGemma can analyze an image (e.g., a chest X-ray) and generate a descriptive summary. It also excels at visual question answering (VQA), allowing clinicians to ask natural language questions about an image.

- Clinical Reasoning & Support: The powerful text comprehension of the 27B models makes them suitable for tasks requiring deep medical knowledge. This includes assisting with patient triage, providing clinical decision support by referencing guidelines, summarizing lengthy patient notes into concise overviews, and answering complex medical questions posed by healthcare professionals.

- Data Retrieval & Management: Leveraging the MedSigLIP encoder, developers can build systems for semantic search across vast medical image archives. This allows for finding visually or semantically similar images without relying on manual tagging. The models can also be fine-tuned for efficient information extraction from unstructured EHR data.
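The semantic-search idea can be sketched as nearest-neighbour ranking over embedding vectors: each archived image is encoded once, and a query is compared against the stored vectors by cosine similarity. In the sketch, embed_images shows how embeddings might be obtained from MedSigLIP through the transformers AutoModel/AutoProcessor interface, assuming the google/medsiglip-448 checkpoint, accepted access terms on Hugging Face, and a transformers version that supports it; the ranking functions are plain Python so they can be tried with any vectors, including the toy ones below.

```python
import math
from typing import Dict, List, Sequence, Tuple


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def rank_by_similarity(query: Sequence[float],
                       archive: Dict[str, Sequence[float]],
                       top_k: int = 3) -> List[Tuple[str, float]]:
    """Return the top_k (image_id, score) pairs, most similar first."""
    scored = [(image_id, cosine_similarity(query, emb)) for image_id, emb in archive.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]


def embed_images(images, model_id: str = "google/medsiglip-448"):
    """Encode PIL images with MedSigLIP (assumed checkpoint; needs torch + transformers).
    Loads the model on every call for brevity; a real system would load it once."""
    import torch
    from transformers import AutoModel, AutoProcessor

    model = AutoModel.from_pretrained(model_id)
    processor = AutoProcessor.from_pretrained(model_id)
    inputs = processor(images=images, return_tensors="pt")
    with torch.no_grad():
        features = model.get_image_features(**inputs)
    return features.tolist()


if __name__ == "__main__":
    # Toy 3-dimensional "embeddings" standing in for real MedSigLIP vectors.
    archive = {
        "cxr_001": [0.9, 0.1, 0.0],
        "cxr_002": [0.1, 0.9, 0.1],
        "derm_017": [0.0, 0.2, 0.95],
    }
    print(rank_by_similarity([1.0, 0.0, 0.0], archive, top_k=2))
```

For a large archive, the linear scan in rank_by_similarity would typically be replaced by an approximate nearest-neighbour index, but the scoring idea stays the same.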

Real-World Implementations (Case Studies)

Early adoption has already demonstrated MedGemma’s potential in diverse global settings:

- askCPG (Malaysia): Developers created an application to help medical professionals in Malaysia navigate the country’s 121 Clinical Practice Guidelines (CPGs). The tool uses MedGemma to interpret uploaded medical photos, combining the image findings with user queries to quickly locate relevant information within the extensive guidelines.

- Tap Health (India) & Chang Gung Hospital (Taiwan): These examples highlight the model’s reliability and multilingual capabilities. Developers at Tap Health in India noted the model’s effectiveness in summarizing progress notes and suggesting guideline-aligned recommendations. Meanwhile, researchers at Chang Gung Memorial Hospital in Taiwan successfully used MedGemma with Traditional Chinese-language medical literature, demonstrating its utility in non-English clinical settings.

The Developer’s Toolkit: How to Get Started

Google has fostered a comprehensive ecosystem to support developers in adopting MedGemma. Access is streamlined through several primary channels:

- Model Hubs: The models are available on platforms like Hugging Face and Google Cloud’s Model Garden. Access on Hugging Face is gated: users must agree to the terms of use, and approval is granted immediately.

- GitHub Repository: The official Google-Health/medgemma repository is the central hub for the community. It contains crucial resources, including quick-start and fine-tuning notebooks (in Colab), supporting code, and forums for community engagement via GitHub Discussions and Issues.

- Getting Started: The setup process is designed to be straightforward for those familiar with modern AI frameworks. It typically involves installing the transformers library and using the provided code snippets to load the model and processor, as detailed in the model cards and developer documentation.
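A minimal sketch of that setup, modeled on the usage pattern shown in the MedGemma model card, follows. It assumes the instruction-tuned google/medgemma-4b-it checkpoint, a recent transformers release that provides the "image-text-to-text" pipeline, a CUDA GPU, and that the gated access terms have already been accepted. The message-building helper is separated out so the chat format can be inspected without downloading the model; the helper names build_messages and ask_medgemma and the prompts are illustrative.

```python
def build_messages(question: str, image, system_prompt: str = "You are an expert radiologist."):
    """Assemble a chat-format request pairing one image with a text question."""
    return [
        {"role": "system", "content": [{"type": "text", "text": system_prompt}]},
        {
            "role": "user",
            "content": [
                {"type": "text", "text": question},
                {"type": "image", "image": image},
            ],
        },
    ]


def ask_medgemma(question: str, image, model_id: str = "google/medgemma-4b-it",
                 max_new_tokens: int = 200) -> str:
    """Run one visual question through MedGemma with the transformers pipeline.
    Requires torch, transformers and a GPU; imports are local so this file
    can be imported without them."""
    import torch
    from transformers import pipeline

    pipe = pipeline(
        "image-text-to-text",
        model=model_id,
        torch_dtype=torch.bfloat16,
        device="cuda",
    )
    output = pipe(text=build_messages(question, image), max_new_tokens=max_new_tokens)
    # The pipeline returns the whole conversation; the last turn is the model's reply.
    return output[0]["generated_text"][-1]["content"]


# Example (not executed here; needs the model weights and a GPU):
#   from PIL import Image
#   print(ask_medgemma("Describe this X-ray.", Image.open("chest_xray.png")))
```

The same message structure covers both report generation ("Describe this X-ray.") and visual question answering (a specific question about the image); only the text part changes.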

The Strategic Shift: Why Open Models are a Game-Changer for Healthcare

Google’s decision to release MedGemma as a family of open models is more than a technical contribution; it represents a strategic pivot that addresses some of the most entrenched challenges in healthcare AI. This move has profound implications for data privacy, model customization, and the overall pace of innovation in the industry.

Addressing the Privacy Imperative

Perhaps the most significant advantage of MedGemma’s open model approach is its direct answer to the privacy imperative in healthcare. The industry is governed by strict regulations (like HIPAA) and a deep-seated need to protect sensitive patient information. Proprietary, cloud-based AI models often require data to be sent to third-party servers, creating significant privacy and security concerns.

MedGemma circumvents this issue by enabling data sovereignty. Because the models can be downloaded and run on local infrastructure, hospitals, research labs, and healthcare companies can keep all patient data securely behind their own firewalls. This local deployment model eliminates reliance on external APIs for inference, giving institutions full control over their data and infrastructure. This approach aligns with the growing demand for tools that prioritize privacy and allow for integration with existing systems without external data sharing.
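With the Hugging Face tooling, keeping inference local usually means downloading the weights once on a machine with internet access, then loading them from disk with network calls disabled. The sketch below assumes huggingface_hub and a recent transformers release are installed; snapshot_download, the HF_HUB_OFFLINE environment variable, and the local_files_only argument are existing features of those libraries, while the helper names and any directory paths are hypothetical.

```python
import os


def download_weights(repo_id: str, local_dir: str) -> str:
    """Step 1, on a connected machine: copy all model files into local_dir."""
    from huggingface_hub import snapshot_download

    return snapshot_download(repo_id=repo_id, local_dir=local_dir)


def enable_offline_mode() -> None:
    """Step 2, inside the secure environment: forbid Hugging Face Hub network calls.
    The variable is read at import time, so call this before importing
    huggingface_hub or transformers in the same process."""
    os.environ["HF_HUB_OFFLINE"] = "1"


def load_local_model(local_dir: str):
    """Step 3: load processor and model strictly from disk."""
    from transformers import AutoModelForImageTextToText, AutoProcessor

    processor = AutoProcessor.from_pretrained(local_dir, local_files_only=True)
    model = AutoModelForImageTextToText.from_pretrained(local_dir, local_files_only=True)
    return processor, model


# Example workflow (paths are hypothetical):
#   download_weights("google/medgemma-4b-it", "/models/medgemma-4b-it")  # connected machine
#   enable_offline_mode()                                                # secure machine
#   processor, model = load_local_model("/models/medgemma-4b-it")
```

Setting local_files_only=True makes loading fail loudly if a file is missing, rather than silently reaching out to the network, which is usually the behavior an air-gapped deployment wants.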

Enabling Customization and Transparency

Healthcare is not monolithic. A model that performs well on one patient population may not be as accurate for another due to demographic, genetic, or environmental differences. Open models empower developers to fine-tune MedGemma for their specific clinical needs and patient populations. This is critical for improving accuracy and, crucially, for mitigating bias.

This stands in stark contrast to “black box” API models, where the inner workings are opaque. The open nature of MedGemma allows for greater auditability and transparency. Researchers and local teams can better understand the model’s decision-making process, audit it for biases relevant to their community, and build more trustworthy systems. This transparency is fundamental to building confidence among clinicians and patients alike.

Democratizing AI Innovation

By providing state-of-the-art models without expensive API fees, Google is fundamentally changing the “economics of experimentation” in medical AI. The high cost of inference from proprietary models can be a significant barrier to entry, particularly for academic researchers, startups, and healthcare systems in lower-resource settings.

MedGemma levels the playing field. The ability to run a powerful, multimodal model on a single GPU lowers the financial and infrastructural barriers to innovation. This democratization fosters a more vibrant, diverse, and competitive ecosystem, enabling a broader range of players to contribute to the development of next-generation healthcare solutions.

Navigating the Risks: Responsibility, Bias, and the Path to Deployment

While MedGemma’s potential is immense, its deployment is not without significant challenges and ethical considerations. A balanced perspective requires acknowledging its limitations and proactively addressing the risks associated with bias, liability, and patient safety. Grounding the excitement in a realistic understanding of these hurdles is crucial for responsible innovation.

Acknowledging the Limitations: Not a Plug-and-Play Solution

It is critical to understand that MedGemma is a foundation model, not a clinical-grade, off-the-shelf product. Google explicitly states that while its baseline performance is strong, it is not yet “clinical-grade” and will likely require further fine-tuning and validation before deployment in a production environment.

Developers and institutions must undertake rigorous testing in their specific clinical contexts to ensure the model’s safety and efficacy. Furthermore, practical challenges remain. Deploying these models at scale can demand significant computational resources, including high-performance GPUs and robust server infrastructure, which can be costly and complex to maintain.

The Ethical Minefield: Bias and Liability

The most pressing ethical challenge is the “bias in, bias out” problem. AI models are trained on data, and if that data reflects existing healthcare disparities, the model can perpetuate or even exacerbate them. A model trained predominantly on data from one demographic may perform suboptimally for minority groups, leading to inequitable or incorrect clinical outcomes. This underscores the need for diverse training datasets and thorough bias evaluation during development and deployment.

This leads to unresolved questions of legal liability. If an AI-assisted diagnosis is incorrect and leads to patient harm, who is responsible: the developer, the hospital, or the clinician who followed the suggestion? A World Economic Forum report highlighted that 80% of healthcare leaders are concerned about this lack of clarity. Without defined regulatory and ethical guidelines, the deployment of AI in clinical practice carries significant risk, making robust human oversight an essential safeguard.
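The bias evaluation called for earlier in this section can start with something as simple as reporting a metric per patient subgroup instead of a single aggregate score. The sketch below computes per-group accuracy from (group, prediction, label) records and the gap between the best- and worst-served groups; the group names and results are made up, and a real audit would typically add further metrics such as sensitivity, specificity, and calibration, along with confidence intervals.

```python
from collections import defaultdict


def accuracy_by_group(records):
    """records: iterable of (group, prediction, label). Returns {group: accuracy}."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, prediction, label in records:
        total[group] += 1
        correct[group] += int(prediction == label)
    return {group: correct[group] / total[group] for group in total}


def max_accuracy_gap(per_group: dict) -> float:
    """Difference between the best- and worst-performing groups."""
    return max(per_group.values()) - min(per_group.values())


if __name__ == "__main__":
    # Hypothetical evaluation results: (subgroup, model output, ground truth)
    results = [
        ("group_a", "pneumothorax", "pneumothorax"),
        ("group_a", "normal", "normal"),
        ("group_a", "normal", "normal"),
        ("group_a", "pneumothorax", "normal"),
        ("group_b", "normal", "pneumothorax"),
        ("group_b", "normal", "normal"),
    ]
    per_group = accuracy_by_group(results)
    print(per_group)                    # {'group_a': 0.75, 'group_b': 0.5}
    print(max_accuracy_gap(per_group))  # 0.25
```

A single pooled accuracy over these six cases would be 4/6, which hides the fact that one group is served noticeably worse than the other; that gap is exactly what a subgroup breakdown is meant to surface.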

Google’s Framework for Responsible AI

Google contextualizes MedGemma within its broader, long-standing commitment to responsible AI. This commitment is articulated through its AI Principles, annual Responsible AI Progress Reports, and technical frameworks like the Secure AI Framework (SAIF) for security and privacy.

Several proactive measures have been taken in the development of MedGemma to align with these principles. The training process relies heavily on de-identified medical data to protect patient privacy. Furthermore, Google provides tools as part of its Responsible Generative AI Toolkit, such as the Learning Interpretability Tool (LIT) and LLM Comparator, which help developers investigate model prompts and qualitatively assess their models for fairness and safety. This ecosystem of tools and principles is designed to guide developers toward building safer and more equitable applications on top of the MedGemma foundation.

Conclusion: Charting the Future of Collaborative Health AI

MedGemma represents a pivotal moment in the evolution of medical technology. It marks the convergence of state-of-the-art multimodal AI with an open, accessible, and privacy-conscious distribution model. By placing powerful, specialized tools directly into the hands of developers, researchers, and healthcare institutions, Google is not just releasing a new model; it is providing a foundational catalyst for a new wave of innovation.

The strategic decision to prioritize local deployment and customization directly addresses the core industry challenges of data sovereignty and the need for adaptable, transparent systems. This approach has the potential to lower the barrier to entry, fostering a more diverse and dynamic ecosystem where solutions can be tailored to specific community needs, rather than being dictated by one-size-fits-all proprietary systems.

However, the path forward requires caution and diligence. The ultimate success of MedGemma and similar models will depend not just on their technical power, but on a concerted, collaborative effort. Developers must commit to rigorous validation, clinicians must maintain critical oversight, and regulators must work to establish clear guidelines for safety and liability. The challenges of bias and equity are not merely technical problems but societal ones that demand continuous attention.

In conclusion, MedGemma is more than a collection of models; it is an invitation to the global healthcare community to build together. It provides the foundational tools to create a more intelligent, equitable, and accessible future for healthcare, but it is the responsible and innovative application of these tools that will truly define its legacy.

References

[1] [2507.05201] MedGemma Technical Report – arXiv

[2] google-gemini/gemma-cookbook: A collection of guides … – GitHub

[3] Google launches MedGemma for healthcare app developers – http://www.mobihealthnews.com/news/google-launches-medgemma-healthcare-app-developers

[4] Google’s open MedGemma AI models could transform healthcare

[5] MedGemma model card | Health AI Developer Foundations

[6] MedGemma: Our most capable open models for health AI …

[7] MedGemma | Health AI Developer Foundations

[8] Google Releases MedGemma: Open AI Models for Medical Text and …

[9] Analyze Medical Images with MedGemma — A Technical Deep Dive

[10] MedGemma – Vertex AI – Google Cloud console

[11] MedGemma Technical Report – arXiv

[12] 20 Pros & Cons of MedGemma by Google Deepmind [2025]

[13] MedGemma

[14] Google-Health/medgemma – GitHub

[15] Sharing our product integration with MedGemma – askCPG

[16] MedGemma Technical Report – arXiv

[17] Inside Google’s MedGemma Models for Healthcare AI – AI Magazine

[18] Get started with MedGemma | Health AI Developer Foundations

[19] Google Launches MedGemma for Healthcare AI Application … – HLTH

[20] The Human Algorithm: Confronting Bias, Safety, and Governance in …

[21] Bias in medical AI: Implications for clinical decision-making – PMC

[22] AI pitfalls and what not to do: mitigating bias in AI – PMC

[23] Mistral 3.1 vs Gemma 3: A Comprehensive Model Comparison

[24] Responsible Generative AI Toolkit | Google AI for Developers

[25] Gemma explained: What’s new in Gemma 3

[26] MedGemma Opens the Next Chapter in Health AI

[27] ‘Bias in, bias out’: Tackling bias in medical artificial intelligence

[28] Gemma 3: Google’s all new multimodal, multilingual, long …

[29] Responsible AI: Our 2024 report and ongoing work

[30] AI Principles – Google AI