The year 2025 has been pivotal for artificial intelligence in healthcare, marked by a significant shift from proprietary, black-box systems to powerful, open foundation models. At the forefront of this movement is Google’s MedGemma, a family of medically-tuned AI models released earlier this year. This guide provides a comprehensive overview of MedGemma’s architecture, capabilities, practical applications, and its place in the rapidly evolving healthcare AI landscape of 2025.


1. The Core Architecture: What Makes MedGemma Tick?

MedGemma is not merely a general-purpose model with a medical vocabulary. It is a suite of models tuned specifically for medical text and image comprehension, combining a state-of-the-art language model with a specialized vision component.

Foundation on Gemma 3

At its core, MedGemma is built on Gemma 3, which in turn draws on the research behind the Gemini models. It uses a decoder-only transformer architecture, a design suited to generative tasks such as drafting medical reports or answering complex clinical questions. From Gemma 3 it also inherits a long context window (up to 128K tokens in the 4B and 27B sizes), which lets the model process an extensive medical history or a full research paper in a single pass.

The Vision Engine: MedSigLIP

The cornerstone of MedGemma’s multimodal capabilities is MedSigLIP, a 400-million-parameter vision encoder specifically tuned for the medical domain. Derived from Google’s SigLIP (Sigmoid-based Language-Image Pre-training) model, MedSigLIP underwent extensive pre-training on a massive, diverse corpus of de-identified medical imagery. This wasn’t just a fine-tuning exercise; it was a deep specialization process using data from multiple modalities, including:

- Chest X-rays

- Dermatology photographs

- Ophthalmology fundus images

- Digital histopathology slides

This specialized training makes MedSigLIP a powerful tool in its own right. As noted in Google’s documentation, it is recommended for tasks that don’t require text generation, such as data-efficient classification, zero-shot classification, or semantic image retrieval from large medical databases.
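To make the zero-shot classification idea concrete, here is a minimal sketch of the scoring step only. It assumes an image embedding and one text embedding per candidate label have already been produced by an encoder pair such as MedSigLIP; the toy vectors, the `temperature` value, and the function names are illustrative assumptions, and a softmax over candidates is used here purely as a simple way to rank labels.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def zero_shot_classify(image_embedding, label_embeddings, temperature=0.1):
    """Rank candidate labels by similarity to the image embedding.

    label_embeddings maps a label string to its text embedding.
    Returns (label, probability) pairs, most likely first.
    """
    labels = list(label_embeddings)
    logits = [
        cosine_similarity(image_embedding, label_embeddings[label]) / temperature
        for label in labels
    ]
    # Softmax over the candidate labels (subtract the max for stability).
    peak = max(logits)
    exps = [math.exp(value - peak) for value in logits]
    total = sum(exps)
    scored = zip(labels, (e / total for e in exps))
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


# Toy 3-dimensional embeddings, purely illustrative (assumption).
image_vec = [0.9, 0.1, 0.2]
text_vecs = {
    "a chest X-ray with pleural effusion": [0.8, 0.2, 0.1],
    "a normal chest X-ray": [0.1, 0.9, 0.3],
}
ranking = zero_shot_classify(image_vec, text_vecs)
print(ranking[0][0])
```

No labeled training images are involved: the candidate classes are expressed as text prompts, so changing the label set only means embedding new prompts.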

2. The MedGemma Model Family: Variants and Use Cases

Google released MedGemma in several variants throughout 2025, allowing developers to choose the optimal model based on their specific needs for performance, modality, and computational resources. The family is primarily divided into 4-billion and 27-billion parameter sizes.

May 20-22, 2025

Initial Launch at Google I/O: Google announces MedGemma and releases the first models: MedGemma 4B (multimodal) and MedGemma 27B (text-only). This marks the official debut of the openly available suite.

July 7, 2025

Technical Report Published: The first version of the “MedGemma Technical Report” is submitted to arXiv, providing deep insights into the models’ architecture, training, and benchmark performance. The report details significant performance gains over base models.

July 9, 2025

Flagship Model Released: Google releases the MedGemma 27B multimodal instruction-tuned model, completing the initial collection. This version integrates image, text, and electronic health record (EHR) data capabilities, making it the most comprehensive model in the family.

Here is a detailed breakdown of the available models:

| Model Variant | Parameters | Modalities | Key Features & Intended Use |
|---|---|---|---|
| MedGemma 4B-it / -pt | 4 billion | Image, text | A workhorse model balancing performance and resource efficiency. The instruction-tuned (-it) version is the recommended starting point for most applications, while the pre-trained (-pt) version is for researchers needing deeper control. Ideal for image analysis and visual question answering. |
| MedGemma 27B Text-Only-it | 27 billion | Text | Optimized exclusively for medical text comprehension. It exhibits slightly higher performance on text-only benchmarks. Best for tasks like summarizing EHRs, querying medical literature, or analyzing clinical notes. Only available in an instruction-tuned version. |
| MedGemma 27B Multimodal-it | 27 billion | Image, text, EHR data | The most powerful and comprehensive model. It integrates longitudinal EHR data, allowing it to connect imaging with a patient’s full medical history for deep contextual reasoning. |
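The selection logic implied by the table can be sketched as a small lookup. This is an illustrative helper, not part of any Google tooling: the `Variant` class, the modality labels, and the "smallest model that covers the requirements" heuristic are all assumptions made for this example, and the variant names are display labels taken from the table rather than model IDs.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Variant:
    name: str
    params_billions: int
    modalities: frozenset


# Display names follow the table; they are labels for this sketch, not model IDs.
VARIANTS = [
    Variant("MedGemma 4B-it", 4, frozenset({"image", "text"})),
    Variant("MedGemma 27B Text-Only-it", 27, frozenset({"text"})),
    Variant("MedGemma 27B Multimodal-it", 27, frozenset({"image", "text", "ehr"})),
]


def choose_variant(required_modalities, max_params_billions=None):
    """Return the smallest variant covering the required modalities, or None."""
    required = frozenset(required_modalities)
    candidates = [
        v for v in VARIANTS
        if required <= v.modalities
        and (max_params_billions is None or v.params_billions <= max_params_billions)
    ]
    # Prefer fewer parameters, then fewer unused modalities.
    candidates.sort(key=lambda v: (v.params_billions, len(v.modalities)))
    return candidates[0] if candidates else None


print(choose_variant({"image", "text"}).name)
print(choose_variant({"text", "ehr"}).name)
```

The heuristic favors the cheapest model that fits; a text-only workload that needs the strongest text performance would pick the 27B text-only variant explicitly instead.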

3. Performance Benchmarks and Improvements

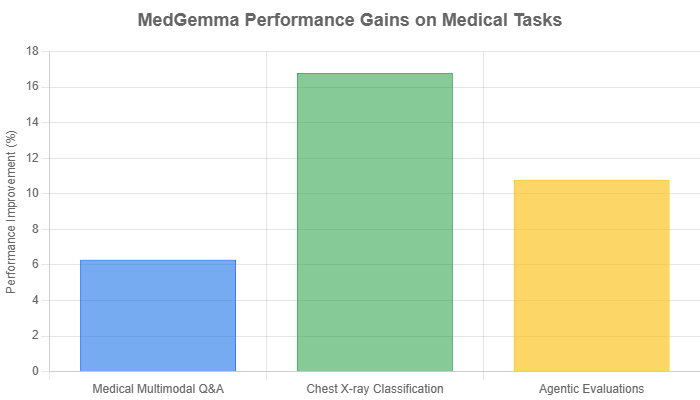

The MedGemma technical report provides concrete data on its capabilities. Compared to the base Gemma 3 models it was built upon, MedGemma demonstrates substantial improvements on a range of out-of-distribution medical tasks, showcasing the value of its specialized tuning.

Chart data sourced from the MedGemma Technical Report, showing performance improvement over base models.

Key performance highlights from the report include:

- Fine-tuning Efficacy: Fine-tuning MedGemma on specific subdomains yields dramatic results, such as a 50% reduction in errors for electronic health record (EHR) information retrieval tasks.

- Specialized Task Performance: After fine-tuning, the model achieves performance comparable to existing state-of-the-art specialized methods for tasks like pneumothorax classification and histopathology patch classification.

- General Capabilities: Importantly, MedGemma maintains the general capabilities of the Gemma 3 base models, preventing the common issue where specialized models perform poorly on non-medical tasks.
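A "50% reduction in errors" is a relative figure, which is easy to misread as a 50-point accuracy gain. The arithmetic can be sketched as follows; the accuracy values are hypothetical and chosen only to illustrate the calculation, not taken from the technical report.

```python
def relative_error_reduction(baseline_accuracy, tuned_accuracy):
    """Fraction of baseline errors removed by fine-tuning (accuracies in [0, 1])."""
    baseline_error = 1.0 - baseline_accuracy
    tuned_error = 1.0 - tuned_accuracy
    if baseline_error <= 0:
        raise ValueError("baseline has no errors to reduce")
    return (baseline_error - tuned_error) / baseline_error


# Hypothetical accuracies: moving from 80% to 90% halves the error rate.
print(round(relative_error_reduction(0.80, 0.90), 2))
```

In this illustration the error rate drops from 20% to 10%, a 50% relative reduction achieved with a 10-point accuracy gain.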

4. Getting Started: A Developer’s Guide

One of MedGemma’s core principles is accessibility. Developers can access the models through multiple channels and deploy them in various environments.

Accessing and Deploying the Models

The models are available from both Google Cloud’s Model Garden and Hugging Face. Developers have two primary deployment options:

- Local Deployment: For experimentation and smaller-scale applications, models can be run locally on a GPU. This requires the `transformers` library (version 4.50.0 or newer).

- Cloud Deployment with Vertex AI: For scalable, production-grade applications, Google recommends deploying via Vertex AI. A typical deployment for the 4B model involves selecting the `g2-standard-24` machine type with an `NVIDIA_L4` accelerator in a region like `us-central1`.
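The Vertex AI settings mentioned above can be gathered in one place before any deployment call is made. The sketch below only builds and validates a settings dictionary; it does not call Google Cloud. The function name and the dictionary layout are assumptions for illustration, the keys under `"deploy"` are intended to mirror the keyword arguments of `Model.deploy` in the `google-cloud-aiplatform` SDK, and the accelerator count is left as an explicit input because it depends on the machine type.

```python
def build_deploy_settings(accelerator_count,
                          machine_type="g2-standard-24",
                          accelerator_type="NVIDIA_L4",
                          location="us-central1",
                          replicas=1):
    """Collect endpoint settings in one place and sanity-check them."""
    if accelerator_count < 1:
        raise ValueError("accelerator_count must be at least 1")
    if replicas < 1:
        raise ValueError("replicas must be at least 1")
    return {
        "location": location,
        "deploy": {
            "machine_type": machine_type,
            "accelerator_type": accelerator_type,
            "accelerator_count": accelerator_count,
            "min_replica_count": replicas,
            "max_replica_count": replicas,
        },
    }


# Assumption: 2 GPUs for g2-standard-24; confirm the GPU count for the chosen
# machine type in the Compute Engine documentation before deploying.
settings = build_deploy_settings(accelerator_count=2)
print(settings["deploy"]["machine_type"], settings["location"])
```

Keeping the settings in plain data makes them easy to review and version alongside application code, and the `"deploy"` entries can then be handed to the SDK as keyword arguments.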

Example: Running MedGemma 4B Locally

The following Python snippet demonstrates how to run the instruction-tuned 4B model locally using the Hugging Face transformers library to analyze a chest X-ray.

```python
# First, ensure you have the necessary libraries:
# pip install -U transformers torch Pillow requests accelerate

from transformers import AutoProcessor, AutoModelForImageTextToText
from PIL import Image
import requests
import torch

model_id = "google/medgemma-4b-it"

# Load the model in bfloat16 and let accelerate place it on the available device(s)
model = AutoModelForImageTextToText.from_pretrained(
    model_id,
    torch_dtype=torch.bfloat16,
    device_map="auto",
)
processor = AutoProcessor.from_pretrained(model_id)

# Load an image (Wikimedia may reject requests that lack a User-Agent header)
image_url = "https://upload.wikimedia.org/wikipedia/commons/c/c8/Chest_Xray_PA_3-8-2010.png"
response = requests.get(image_url, headers={"User-Agent": "example"}, stream=True)
image = Image.open(response.raw)

# Create the prompt
messages = [
    {
        "role": "system",
        "content": [{"type": "text", "text": "You are an expert radiologist."}],
    },
    {
        "role": "user",
        "content": [
            {"type": "text", "text": "Describe this X-ray"},
            {"type": "image", "image": image},
        ],
    },
]

# Tokenize the chat and return a dict of tensors that generate() can unpack
inputs = processor.apply_chat_template(
    messages,
    add_generation_prompt=True,
    tokenize=True,
    return_dict=True,
    return_tensors="pt",
).to(model.device, dtype=torch.bfloat16)
input_len = inputs["input_ids"].shape[-1]

# Generate a response
with torch.inference_mode():
    generation = model.generate(**inputs, max_new_tokens=200, do_sample=False)

# Decode only the newly generated tokens, not the echoed prompt
decoded_text = processor.decode(generation[0][input_len:], skip_special_tokens=True)
print(decoded_text)
```

This code is adapted from the official MedGemma model card.
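Since the local setup depends on `transformers` 4.50.0 or newer, a quick version check before loading the model can save a confusing failure later. The following is a minimal sketch using only the standard library; the helper names are hypothetical, and the parser is deliberately simple (it ignores pre-release suffixes such as `rc1` or `.dev0`).

```python
from importlib import metadata

MIN_TRANSFORMERS = "4.50.0"


def parse_version(text):
    """Turn '4.50.0' into (4, 50, 0); stops at the first non-numeric part."""
    parts = []
    for piece in text.split("."):
        digits = ""
        for ch in piece:
            if not ch.isdigit():
                break
            digits += ch
        if not digits:
            break
        parts.append(int(digits))
    return tuple(parts)


def meets_minimum(installed, minimum=MIN_TRANSFORMERS):
    """Compare dotted versions numerically, padding the shorter one with zeros."""
    a, b = parse_version(installed), parse_version(minimum)
    width = max(len(a), len(b))
    a += (0,) * (width - len(a))
    b += (0,) * (width - len(b))
    return a >= b


def installed_transformers_ok():
    """True or False if transformers is installed, None if it is missing."""
    try:
        return meets_minimum(metadata.version("transformers"))
    except metadata.PackageNotFoundError:
        return None


print(installed_transformers_ok())
```

Comparing integer tuples avoids the string-comparison trap in which `"4.9.0"` sorts after `"4.50.0"`.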

5. Limitations and Ethical Considerations

While MedGemma represents a significant advancement, it is crucial to understand its limitations and the ethical responsibilities that come with its use. Google is explicit that MedGemma is a foundational tool for developers, not a clinically validated medical device.

Known Limitations and Performance Gaps

- Not for Direct Clinical Use: The models are not approved for direct patient diagnosis or treatment and require extensive validation and fine-tuning for any specific clinical use case.

- Potential for Errors: Early testing has shown that the models can miss findings. In one widely cited case, clinician Vikas Gaur reported that MedGemma 4B failed to identify clear signs of tuberculosis on a chest X-ray, labeling it as normal. This highlights the critical need for human oversight and further specialized training.

- Evaluation Scope: The models have not been formally evaluated for multi-turn conversations or for processing multiple images in a single prompt, which may limit their use in complex, interactive diagnostic scenarios.
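One practical way to respect the evaluation-scope limitation is to flag prompts that fall outside it before they reach the model. The sketch below assumes the chat-message structure used with the Hugging Face chat template (a list of role/content dictionaries); the function name and warning wording are hypothetical.

```python
def check_evaluated_scope(messages):
    """Return warnings for prompts outside the single-image, single-turn scope."""
    user_turns = 0
    image_count = 0
    for message in messages:
        if message.get("role") == "user":
            user_turns += 1
        content = message.get("content", [])
        if isinstance(content, str):
            continue  # plain-text content carries no images
        image_count += sum(1 for part in content if part.get("type") == "image")

    warnings = []
    if user_turns > 1:
        warnings.append(
            f"{user_turns} user turns: multi-turn use has not been formally evaluated"
        )
    if image_count > 1:
        warnings.append(
            f"{image_count} images: multi-image prompts have not been formally evaluated"
        )
    return warnings


single = [
    {"role": "user", "content": [
        {"type": "text", "text": "Describe this X-ray"},
        {"type": "image", "image": "placeholder"},
    ]},
]
print(check_evaluated_scope(single))
```

An application could log these warnings or route such requests to additional human review rather than blocking them outright.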

Ethical Responsibilities

The use of powerful AI in healthcare raises important ethical questions that developers must address:

- Data Bias: The model’s performance is dependent on its training data. If the data lacks diversity across ethnicities, age groups, or rare diseases, the model could produce biased or inequitable outputs. Developers must be vigilant about testing for and mitigating bias.

- Patient Consent and Transparency: Ethical issues arise around patient consent and awareness. Patients should be informed when AI is involved in their care pathway to maintain trust and autonomy.

- Accountability: As a foundational model, MedGemma places the responsibility squarely on the developers building downstream applications to ensure their products are safe, effective, and compliant with regulations like HIPAA.
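Testing for bias usually starts with breaking evaluation results down by subgroup. The following is a minimal sketch of that step, assuming each evaluation record carries a group label, a model prediction, and a reference label; the group names and records are invented for illustration, and a real audit would use clinically meaningful groups and more than one metric.

```python
from collections import defaultdict


def accuracy_by_group(records):
    """records: iterable of (group, prediction, label). Returns {group: accuracy}."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, prediction, label in records:
        total[group] += 1
        correct[group] += int(prediction == label)
    return {group: correct[group] / total[group] for group in total}


def largest_gap(per_group):
    """Difference between the best and worst group accuracy."""
    values = list(per_group.values())
    return max(values) - min(values)


# Invented records for illustration only (assumption).
records = [
    ("group_a", "normal", "normal"),
    ("group_a", "abnormal", "abnormal"),
    ("group_b", "normal", "abnormal"),
    ("group_b", "abnormal", "abnormal"),
]
per_group = accuracy_by_group(records)
print(per_group, largest_gap(per_group))
```

A large gap between groups is a signal to examine the training and evaluation data for under-representation before deploying a downstream application.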

6. MedGemma in the 2025 AI Healthcare Landscape

MedGemma did not emerge in a vacuum. Its release is a key part of the broader AI trends shaping healthcare in 2025. Where products such as Aidoc focus on real-time imaging alerts and IBM Watson Health (since sold and rebranded as Merative) focused on clinical decision support, MedGemma’s primary contribution is as an open, foundational layer that can power a new generation of such tools.

It directly enables several key 2025 healthcare trends identified by industry analysts:

- AI-Enhanced Medical Imaging: MedGemma provides the engine for building next-generation tools that assist radiologists with greater accuracy and speed.

- Personalized and Precision Medicine: The 27B multimodal model’s ability to integrate imaging, text, and EHR data is a step toward creating truly personalized treatment plans.

- AI in Drug Discovery: The text models can accelerate research by rapidly summarizing and synthesizing vast amounts of medical literature.

By providing open access to these powerful capabilities, Google is empowering a wider community of researchers and developers to innovate, accelerating the cycle of development and discovery in a field that has historically been dominated by closed, proprietary systems.

Conclusion: A Foundation for the Future

As of September 2025, MedGemma stands as a landmark release in healthcare AI. It represents a democratization of cutting-edge medical AI, providing developers with a powerful, transparent, and adaptable foundation. While the path to clinical integration is paved with challenges of validation, regulation, and ethical diligence, MedGemma provides the essential building blocks.

Its true impact will be measured not by its out-of-the-box performance, but by the innovative, safe, and effective applications that the healthcare community builds upon it. As one developer noted, “The future of healthcare won’t be built in silos. It’ll be built in open collaboration—with models like MedGemma leading the way.” This collaborative spirit is perhaps MedGemma’s most important contribution to the future of medicine.